On-Call Credos

I've been in on-call rotations for more than a decade. When computers I manage have a problem, they page my team 24/7 for help. I use these credos so being on-call goes as smoothly as possible.

Find out before the customer does

Whether the customer is internal or external, monitors should be tuned so the on-call team can respond and make repairs before those relying on the service notice there's a problem.

Every high-priority alert should be actionable

Alert fatigue is real. Sure, send some informational-but-not-actionable alerts to a chat channel.

But if the on-call team is getting woken up and interrupted when there's no real work to do, that's a problem. Re-evaluate:

- Can the system or alert be tuned so that alerts are actionable?

- Do we need this alarm at all?

- Should the alert threshold be temporarily raised?

- Could the alert be temporarily disabled, with a reminder set for when the issue is expected to be fully resolved?
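To make these options concrete, here's a minimal sketch of alert routing rules, assuming a hypothetical in-house alert router (`AlertRule`, `route`, and `expired_snoozes` are illustrative names, not any paging product's API). Actionable alerts page a human, informational ones go to chat, and a temporarily disabled alert carries an expiry date so it resurfaces as a reminder instead of being silently forgotten. Temporarily raising a threshold amounts to editing `threshold` on the same rule.

```python
# A sketch of alert routing for a hypothetical in-house alert router;
# these names are illustrative, not a real paging service's API.
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class AlertRule:
    name: str
    threshold: float  # fire when the metric reaches this value; raise it temporarily if needed
    actionable: bool  # is there real work for a human when this fires?
    snoozed_until: Optional[datetime] = None  # temporary disable, always with an expiry


def route(rule: AlertRule, value: float, now: datetime) -> str:
    """Decide where a metric reading goes: 'ok', 'snoozed', 'page', or 'chat'."""
    if value < rule.threshold:
        return "ok"
    if rule.snoozed_until is not None and now < rule.snoozed_until:
        return "snoozed"
    # Only actionable alerts interrupt the on-call team; the rest go to a chat channel.
    return "page" if rule.actionable else "chat"


def expired_snoozes(rules: List[AlertRule], now: datetime) -> List[str]:
    """The reminder: rules whose snooze has lapsed and need re-evaluating."""
    return [r.name for r in rules if r.snoozed_until is not None and now >= r.snoozed_until]


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 9, 0)
    noisy = AlertRule("queue_depth", 100, actionable=True, snoozed_until=now + timedelta(days=3))
    print(route(noisy, 500, now))  # snoozed
```

The key design choice is that a snooze can't be open-ended: every disabled alert has a date on which someone is reminded to decide whether it should come back, be retuned, or be deleted.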

Critical systems should be redundant

A lot of high-priority emergencies can be converted into low-priority situations if there's a policy and practice that all critical systems are redundant.

For non-critical servers, use a feature like the one Amazon Web Services offers to detect when the underlying hardware fails. It can then automatically re-provision new hardware and reboot the server. That's exactly what an on-call human would do in that situation, so automate the fix.

The first responder should be able to resolve most issues

For alerts about a particular app, someone familiar with the app should be on-call to support it. New members of the on-call team can shadow more experienced members to gain experience before taking solo duty.

There should be a secondary on-call

In the event the primary doesn't respond in a timely manner, alerts should roll over to someone else. Clearly spell out the responsibilities of each role. The primary should be expected to handle the pages, or to have made arrangements in advance if there's a period they aren't able to cover. Two people on-call hoping the other will take a page is not a strategy.
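Paging services typically handle this rollover with escalation policies, but the logic is simple enough to sketch. The minimal Python example below assumes a hypothetical two-level policy in which each responder gets a fixed acknowledgment window before the page moves to the next person; `EscalationLevel`, `responder_for`, and the 15-minute windows are illustrative, not a recommendation or any product's API.

```python
# A sketch of a primary/secondary escalation policy; EscalationLevel and
# responder_for are hypothetical names, not a paging service's API.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EscalationLevel:
    responder: str
    ack_timeout_minutes: int  # time this level has to acknowledge before rolling over


def responder_for(policy: List[EscalationLevel], minutes_unacked: float) -> Optional[str]:
    """Who should be paged after a page has gone unacknowledged for minutes_unacked.

    Returns None once every level has timed out, a signal that the policy itself failed.
    """
    deadline = 0
    for level in policy:
        deadline += level.ack_timeout_minutes  # cumulative cutoff for this level
        if minutes_unacked < deadline:
            return level.responder
    return None


if __name__ == "__main__":
    policy = [EscalationLevel("primary", 15), EscalationLevel("secondary", 15)]
    for minutes in (0, 20, 45):
        print(minutes, responder_for(policy, minutes))
```

Writing the policy down as data like this also makes the roles explicit: there's never a moment where two people are both "sort of" responsible for the same page.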

Have a runbook that documents possible alerts and common responses to them

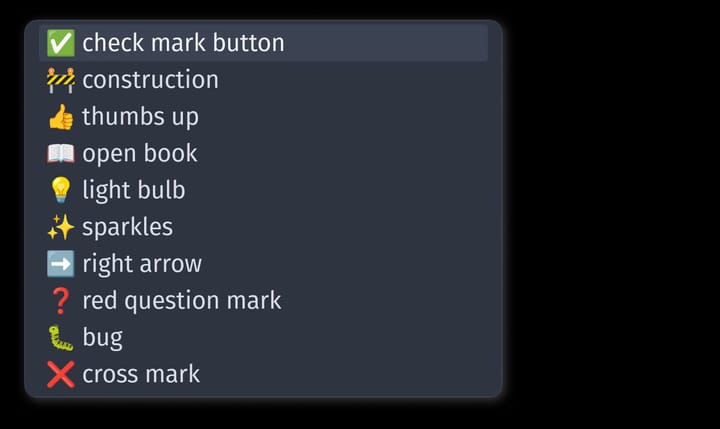

Ideally, for every issue there would be some way to prevent it from happening or to automate a fix. For the rest, documentation and a checklist are the next best thing. I've used a Google Doc organized by server or service. For each, there's a brief Context section to remind responders what the thing does and a Contact section listing service experts to escalate to. In some cases, escalations to these folks can be automated with the paging service. Finally, there are notes on any common alerts and their resolutions. For example:

Blog server: High CPU usage on this server often indicates WordPress is getting attacked again. Consider blocking the IP addresses involved at the firewall.

Even better: Use the tools in services like PagerDuty to include or link the documentation you need for each alert right into the alert itself.
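If the runbook is also kept as structured data, it can be attached to alerts automatically. The sketch below assumes a hypothetical enrichment step that runs before an alert is sent; the `RUNBOOK` layout mirrors the Context, Contact, and notes sections described above, while `enrich_alert`, the `blog-server` key, the `high_cpu` alert type, and the `web-team` contact are made-up placeholders rather than any paging service's API.

```python
# A sketch of attaching runbook entries to alerts; all names and entries here
# are hypothetical placeholders, not a paging service's API.
from typing import Dict

RUNBOOK = {
    "blog-server": {
        "context": "Hosts the WordPress blog.",
        "contacts": ["web-team"],  # hypothetical escalation contact
        "notes": {
            "high_cpu": "Often indicates WordPress is getting attacked again. "
                        "Consider blocking the IP addresses involved at the firewall.",
        },
    },
}


def enrich_alert(service: str, alert_type: str, summary: str) -> Dict[str, str]:
    """Build an alert body that carries the relevant runbook entry with it."""
    body = {"summary": summary}
    entry = RUNBOOK.get(service)
    if entry is None:
        # A missing entry is itself a follow-up task for after the incident.
        body["runbook"] = "No runbook entry yet: add one after resolving this alert."
        return body
    body["context"] = entry["context"]
    body["escalate_to"] = ", ".join(entry["contacts"])
    body["runbook"] = entry["notes"].get(alert_type, "No specific notes for this alert type.")
    return body


if __name__ == "__main__":
    print(enrich_alert("blog-server", "high_cpu", "CPU above 90% for 10 minutes"))
```

Keeping the runbook next to the alerting config, rather than only in a separate document, makes it harder for the two to drift apart.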

Production incidents should be debriefed

Ah, there's nothing quite like the team bonding that comes from living through a fire-fight to get a service back online together. Amirite?

To minimize reunions of your firefighting brigade, learn from the mistakes made and proactively take steps to minimize recurrence. When there's a significant outage, schedule a production incident debrief within a week or two of the incident. The focus is forward-looking: improving systems. It is not a blame session. Use a standard template and time-box the meetings to keep things moving. Thirty minutes is often enough. Here are prompts from a production incident debrief template I've used that have stood the test of time:

- Incident Summary and Timeline

- Actions Already Taken

- What went well?

- How can we prevent similar incidents in the future?

- How can we respond more efficiently and effectively?

- What follow-up actions should be taken?

Have a person close to the incident write up the timeline and run the meeting. Invite all stakeholders relevant to the incident.

Everything else matters too

To spell out everything else that could improve the quality of life of on-call responders would be to describe an entire well-managed IT department.

There will always be inconveniences with being on-call. With some solid first principles and a commitment to continual improvement, there's hope of getting to the point where alerts are minimal... and actionable.
